02-Aug-2025 , Updated on 8/3/2025 10:56:13 PM

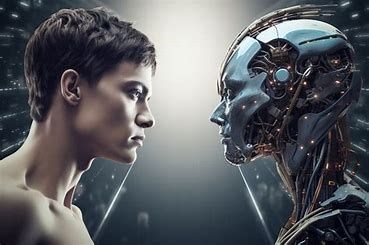

Human vs. Machine Decision-Making in War

Ethical Accountability Demands Human Oversight

Lethal decisions in war require human judgment because ethical responsibility must rest with a person. When an autonomous weapons system makes a kill decision without a human, it creates an accountability gap. Machines lack moral agency and cannot exercise the judgment that international humanitarian law demands. Technology can supply information to guide decisions, but humans should remain the ones who decide whether to use force. Only a human can exercise discretion, weighing context, proportionality, and the particulars of each situation when deciding whether to act. Keeping humans in this role ensures that identifiable individuals are legally and morally accountable for violations. Entrusting life-and-death decisions to computers is morally unacceptable; oversight cannot be delegated to a machine.

Algorithmic Speed Versus Contextual Judgment

Algorithms bring unmatched speed to warfare and can make decisions and respond faster than people. That speed offers a tactical advantage in time-sensitive situations. But speed-driven decision-making, by its nature, strips out locally contextualised judgment. Human cognition draws on situational awareness, moral judgment, cultural knowledge, and the ability to interpret ambiguous intent, none of which falls within the scope of algorithmic processes. Over-reliance on computational speed risks serious errors: misidentifying targets and harming non-combatants, or escalating inappropriately on the basis of misinterpreted information. Useful as speed is in operations, human judgment is what brings success when decisions turn on complex ethical boundaries, proportionality, and necessity on the battlefield. Military decision-making in war therefore requires a balance between the machine speed of algorithms and the human judgment that remains necessary.

Bias in Data Risks Unintended Escalation

Machine decision-making in warfare that relies on biased training data creates a high risk of escalation. Algorithms trained on historically biased data carry that bias into the model and its outputs. Those outputs may recommend unnecessary force, classify neutral entities as hostile, or misjudge how an adversary will react. Human operators, whether because they over-trust the apparent objectivity of the technology or because they are under operational stress, may not scrutinise these biased recommendations sufficiently. The combination of flawed recommendations, algorithmic forecasting, and constrained human judgment allows misunderstandings to occur. Even when operators act in good faith, biased data may lead to disproportionate force, unintended targeting, and rapid, uncontrollable escalation in conflict areas. Mitigating this risk requires rigorous data validation and enforced human-review protocols.

Unintended Consequences: Civilian Harm Risk

Mitigating unintended civilian harm is central to the debate over human versus machine decision-making in warfare. Human operators have the benefit of contextual judgment, but they are subject to fatigue, bias, stress, and information overload, and are prone to catastrophic errors. Automated systems are fast and consistent, but they lack real situational awareness and moral reasoning. They rely solely on training data and algorithms, which creates a risk of false positives or negatives, unexpected interactions in complex situations, and a responsibility gap when they fail. Historical evidence supports this concern: both human mistakes and algorithmic errors can lead to civilian deaths. Neither paradigm removes the inherent risk of civilian harm in warfighting decisions. Strict protective guardrails and accountability checks are necessary regardless of who, or what, makes the decision.

Hybrid Systems Require Stringent Safeguards

Integrating artificial intelligence into military command systems creates an urgent need for safeguards around lethal decisions. Hybrid frameworks introduce serious vulnerabilities. Over-reliance on algorithmic suggestions can desensitise human operators and blur responsibility when results are wrong. Machines lack the contextual knowledge, moral reasoning, and legal competence required to wage war lawfully. Strong safeguards are non-negotiable. They should include unambiguous human authorisation procedures before any weapon is launched, continuous human supervision with the ability to vet orders before they are carried out, rigorous testing for bias and malfunction, and clear legal rules that place liability for life-and-death outcomes on the humans responsible.

Content Writer

Hi, I’m Meet Patel, a B.Com graduate and passionate content writer skilled in crafting engaging, impactful content for blogs, social media, and marketing.

Copyright 2010 - 2026 MindStick Software Pvt. Ltd. All Rights Reserved